Upper-body Joint Tracking and Analysis

I have worked on several projects that track and analyze people's upper-body joints using different sensors and methods. This section describes the work I completed on each project and the joint tracking and analysis skills I acquired or improved along the way.

Feel free to contact me if I can provide value to your projects.

Super Pop VR™

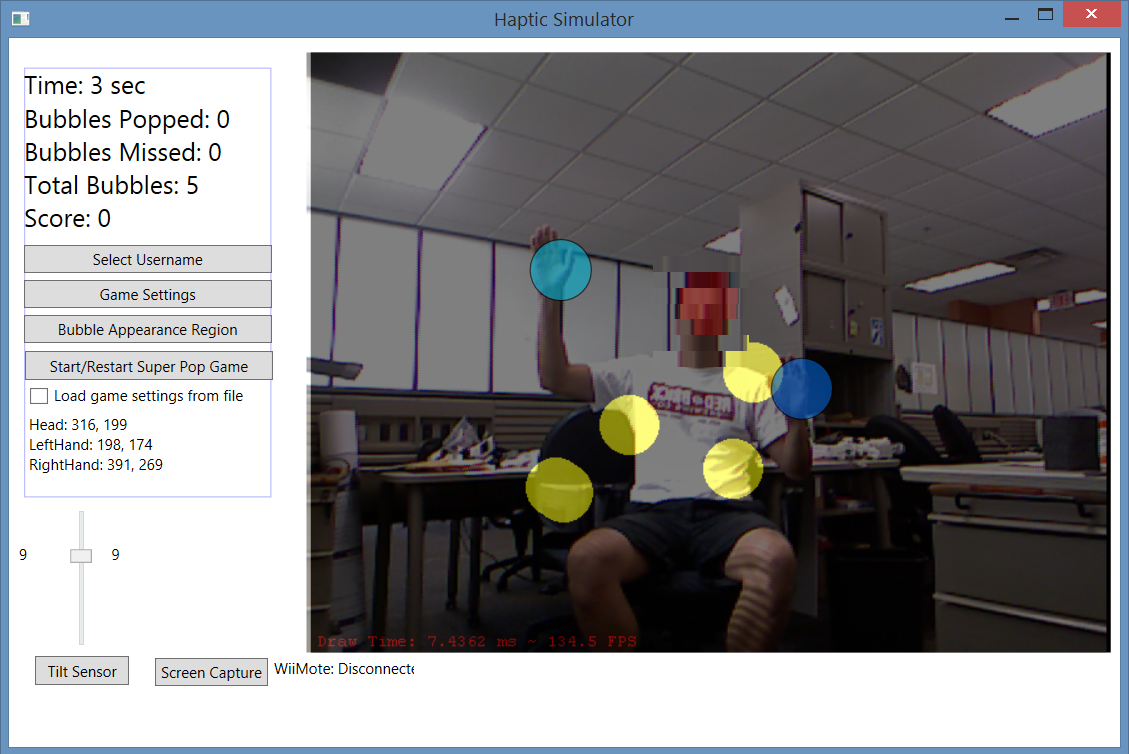

As a PhD student at Georgia Tech and a member of the HumAnS Lab, I developed an adaptable in-home VR game (its main interface is pictured in the banner of this post) for use in the physical therapy intervention protocols of children with cerebral palsy.

Under the supervision of my PhD advisor, Dr. Ayanna Howard, I developed a kinematic baseline for assessing upper-body motor skills (published article). This baseline, combined with the Microsoft Kinect camera's ability to track a user's upper-body 3D joint coordinates, made it possible to develop the game, which is being validated in several clinical trials (read more in this published journal article).
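The published baseline is not reproduced here. The Python below is a minimal, hypothetical sketch of one kind of kinematic measure that can be derived from tracked 3D joint coordinates. It computes the angle at a joint from three joint positions (for example shoulder, elbow, and wrist) and the range of motion of that angle over a sequence of frames. The function names, the dictionary keys, and the choice of elbow angle as the measure are illustrative assumptions, not the metrics used in the published work.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical point type: (x, y, z), e.g. meters in the tracker's camera space.
Point3D = Sequence[float]


def joint_angle(a: Point3D, b: Point3D, c: Point3D) -> float:
    """Angle at joint b (degrees) between segments b->a and b->c."""
    u = [ai - bi for ai, bi in zip(a, b)]
    v = [ci - bi for ci, bi in zip(c, b)]
    dot = sum(ui * vi for ui, vi in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("two joints coincide; the angle is undefined")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def elbow_range_of_motion(frames: List[Dict[str, Point3D]]) -> float:
    """Max minus min elbow angle across frames.

    Each frame is a dict with hypothetical keys "shoulder", "elbow", "wrist".
    """
    angles = [joint_angle(f["shoulder"], f["elbow"], f["wrist"]) for f in frames]
    return max(angles) - min(angles)
```

Measuring the angle at the middle joint depends only on the relative positions of the three joints. As a result, the value does not change when the user stands closer to or farther from the camera.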

The following video gives an overview of how the Super Pop VR™ game works:

This work was done in collaboration with Dr. Yu-ping Chen. You can read more about this project and its results in my published journal articles and conference papers.

DARwIn-OP

Together with my labmate LaVonda Brown, I explored the use of DARwIn-OP (a.k.a. Darwin), a humanoid robot platform used by researchers around the world. We integrated Darwin with Super Pop VR™ so that it could give verbal and non-verbal feedback to users playing the game.

The following image shows Darwin demonstrating a movement sequence for users to mimic. You can read more about this work in this published journal article.


OpenPose Library

While consulting for Cosmos Robotics, we explored OpenPose, a library developed by the Perceptual Computing Lab at Carnegie Mellon University that detects and tracks human body, hand, facial, and foot keypoints in single images in real time.

Our task was to write software that automatically detects whether a person has fallen to the ground. The following video shows an example of our implementation, which builds on OpenPose's keypoint tracking.
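Our implementation is not reproduced here. The code below is a rough, hypothetical sketch of one common fall-detection heuristic. It flags a person whose torso (neck to mid-hip) tilts more than a threshold away from vertical, and it only raises an alarm once that condition persists over several consecutive frames. The sketch assumes OpenPose's default BODY_25 body model and its JSON output, in which each entry in `people` stores `pose_keypoints_2d` as a flat list of (x, y, confidence) triplets. The thresholds and all function and class names are illustrative assumptions, not tuned parameters.

```python
import json
import math
from typing import List, Optional, Sequence, Tuple

# Keypoint indices in OpenPose's BODY_25 model (assumed model; check model_pose).
NECK = 1
MID_HIP = 8


def get_keypoint(flat: Sequence[float], idx: int,
                 min_conf: float = 0.1) -> Optional[Tuple[float, float]]:
    """Return (x, y) for keypoint idx, or None if its confidence is too low."""
    x, y, conf = flat[3 * idx:3 * idx + 3]
    return (x, y) if conf >= min_conf else None


def torso_tilt_deg(flat: Sequence[float]) -> Optional[float]:
    """Angle between the hip-to-neck segment and image vertical, in degrees.

    0 means upright and 90 means horizontal. Image y grows downward, so an
    upright person's neck has a smaller y than their mid-hip.
    """
    neck = get_keypoint(flat, NECK)
    hip = get_keypoint(flat, MID_HIP)
    if neck is None or hip is None:
        return None
    dx = abs(neck[0] - hip[0])
    dy = hip[1] - neck[1]
    return math.degrees(math.atan2(dx, dy))


def fallen_people(frame_json: str, tilt_threshold_deg: float = 60.0) -> List[int]:
    """Indices of people in one OpenPose JSON frame whose torso looks horizontal."""
    frame = json.loads(frame_json)
    flagged = []
    for i, person in enumerate(frame.get("people", [])):
        tilt = torso_tilt_deg(person["pose_keypoints_2d"])
        if tilt is not None and tilt > tilt_threshold_deg:
            flagged.append(i)
    return flagged


class FallMonitor:
    """Hypothetical helper: alarm only after a fall-like pose lasts min_frames frames."""

    def __init__(self, min_frames: int = 15):
        self.min_frames = min_frames
        self.count = 0

    def update(self, fallen_this_frame: bool) -> bool:
        # Reset the streak whenever no fall-like pose is seen in a frame.
        self.count = self.count + 1 if fallen_this_frame else 0
        return self.count >= self.min_frames
```

A typical loop would call `monitor.update(bool(fallen_people(frame_json)))` once per frame. Requiring several consecutive frames reduces false alarms from brief poses such as bending over. The monitor checks only whether anyone in the frame looks fallen, because this sketch does not follow individual identities across frames.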

Technical Skills

The following table summarizes my proficiency in the technical skills I acquired or improved while working on the projects described in this post:

| Technical skill | Proficiency |
|---|---|
| ROS and ROS 2 | |
| C++ | |
| Python | |
| Human-Robot Interaction | |
| C# and Visual Studio | |
| Kinect from Microsoft | |